After 5 years I felt I needed to leave CRKN.

I want to provide clarity for the following: While I am critical of some policies, procedures, and work practices that I felt delayed or blocked productive work being done, I am not being critical of individual people. I have noticed over the years that critique of policy is regularly misinterpreted as a critique of a person.

I never knew why in the past, but I have since learned this is a common miscommunication between Autistic and Allistic (non-Autistic) people. The same goes for the question “why?”: Autistic people ask it out of a constant desire to learn, while Allistic people apparently hear it as a challenge or an argument.

While the treatment I received from management because I was “Autistic At Work” was the final straw, I felt I was constantly having to fight with the management team to be allowed to do productive work. While there was agreement in theory, in practice there was always pushback against moving away from the DIY (Do It Yourself) and NIH (Not Invented Here) attitudes.

I generally did not feel my gifts or contributions were being recognized or harnessed.

Differences in what I was told compared to what actually happened

Early in 2018 two different priorities were set for the small technology team: Archivematica adoption and reducing technological debt.

Managing custom software needs to be thought of as technological debt, so reducing technological debt includes moving away from custom software. Over the past 5 years there was minimal movement on the Archivematica project, and there is now more custom software and more CRKN-owned hardware in member data centers than there was in 2018.

I’ll focus on only two specific areas to illustrate the problem.

Archivematica

[Photo: From a UBC Campus tour, Archivematica Camp]

Three CRKN staff members were sent to Archivematica Camp 2019, but were never able to make use of what was learned.

|

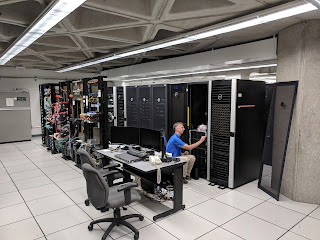

| 2 racks of CRKN servers at UTL Many ScholarsPortal servers a close by. |

Canadiana working with OLRC would have doubled OLRC’s object storage, so this was not a simple client relationship but a partnership. While managing technical services was new for CRKN, organizing broad cross-organizational collaborations and partnerships was exactly what CRKN was known to be good at.

Custom Cataloging Rules

One of the largest areas of push-back against adopting FLOSS community software was the use of custom cataloging rules by Canadiana and later CRKN’s cataloging team.

1978: CIHM

[Photo: Many drawers of Microfiche...]

Canadian Institute for Historical Microreproductions (CIHM) launched in 1978, and created the CIHM/ICMH Microfiche series.

MARC records were created for this series, using the MARC 490 field to indicate which specific Microfiche in that series was being described.

- 490$a would say something like “CIHM/ICMH Microfiche series = CIHM/ICMH collection de microfiches”

- 490$v would say something like “99411” or “no. 99411”

The identifiers were all in the CaOOCIHM namespace (MARC 003 = “CaOOCIHM”), with MARC 001 indicating “99411” as well.

1999: ECO platform

When the ECO (Early Canadiana Online) platform launched in 1999, the existing Microfiche descriptive records and the existing CIHM data model were used.

That platform was decommissioned in 2012, and decommissioning that older service was one of the earlier projects I was involved in.

2012: CAP (Canadiana Access Platform)

In 2012, new software was launched which had a different data model and used a different schema for identifiers. Canadiana moved beyond offering online access to scanned images from the CIHM Microfiche collection to offering access to other collections as well.

- Identifiers were expanded to have a prefix indicating a depositor. That meant “99411” needed to become “oocihm.99411”. CIHM Numbers were deprecated, and should all have been quickly replaced with complete identifiers.

- Not everything, and not even all the images from the Microfiche collection, would be considered part of the same collection as was the case for CIHM. This meant that CIHM’s way of using the 490 field was deprecated, with the intention being to use that field in the more common way in the future (to describe collections and volumes/issues of those collections).

- We could have used MARC 001 for the full CAP identifier (not CIHM numbers), but we wanted to move from using a transparent identifier in that field to using a machine-generated opaque identifier. The purpose of these records was to describe an online resource, so MARC 856 was the obvious place to put the transparent identifier (within a full URL such as https://www.canadiana.ca/view/oocihm.99411).
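The identifier change described in the list above can be sketched in a few lines. This is only an illustration, not the actual CAP code: the function names are hypothetical, and the only inputs taken from this post are the “99411” / “no. 99411” forms, the “oocihm” prefix, and the example URL.

```python
import re

# Values taken from the examples in this post.
DEPOSITOR_PREFIX = "oocihm"
BASE_URL = "https://www.canadiana.ca/view/"

# Accepts "99411" as well as the "no. 99411" form seen in 490$v.
_CIHM_NUMBER = re.compile(r"\s*(?:no\.\s*)?(\d+)\s*", re.IGNORECASE)


def cihm_number_to_cap_id(value: str, prefix: str = DEPOSITOR_PREFIX) -> str:
    """Turn a bare CIHM number into a depositor-prefixed identifier.

    Hypothetical helper: raises ValueError when the value does not look
    like a CIHM number, rather than guessing.
    """
    match = _CIHM_NUMBER.fullmatch(value)
    if match is None:
        raise ValueError(f"not a recognizable CIHM number: {value!r}")
    return f"{prefix}.{match.group(1)}"


def cap_url(cap_id: str) -> str:
    """Build the public URL that would be recorded in MARC 856."""
    return BASE_URL + cap_id
```

For example, `cap_url(cihm_number_to_cap_id("no. 99411"))` gives the URL shown in the last bullet.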

While not ideal, CAP made use of a custom schema inspired by Dublin Core for issues of series called IssueInfo (Issueinfo.xsd). This is how the CAP system knew the difference between a “Title” (Series record or “Monograph” which could be described using Dublin Core or MARC) and an issue of a series (which must be cataloged using an IssueInfo record).

CAP did not make use of the 490 field. However, because the Cataloguing team pushed back against using MARC 856, we still needed to support looking for CIHM-era identifiers stuffed into 490$v when records were being loaded into the databases.
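To make that fallback concrete, here is a rough sketch of the kind of lookup a loader has to do. It is not the real loader: the record is modelled as a plain dictionary (a hypothetical structure, not any particular MARC library), and I am assuming the URL sits in subfield $u of 856, which is the standard MARC subfield for a URI.

```python
import re
from typing import Optional

# Hypothetical in-memory record: MARC tag -> list of {subfield code: value}.
Record = dict[str, list[dict[str, str]]]

VIEW_URL = re.compile(r"^https?://www\.canadiana\.ca/view/([^/?#\s]+)")
LEGACY_NUMBER = re.compile(r"^\s*(?:no\.\s*)?(\d+)\s*$", re.IGNORECASE)


def find_cap_id(record: Record, legacy_prefix: str = "oocihm") -> Optional[str]:
    """Return the CAP identifier for a record, or None if none is found."""
    # Preferred: the transparent identifier inside the 856 URL.
    for field in record.get("856", []):
        match = VIEW_URL.match(field.get("u", ""))
        if match:
            return match.group(1)
    # Fallback: a CIHM-era number in 490$v, which needs the prefix added.
    for field in record.get("490", []):
        match = LEGACY_NUMBER.match(field.get("v", ""))
        if match:
            return f"{legacy_prefix}.{match.group(1)}"
    return None
```

The point of the ordering is that the 490$v path only exists for legacy records; a record carrying a proper 856 URL never touches it.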

2022: Preservation-Access Split

After years of delay due to pushback and other projects being given priority, the Preservation-Access split was launched in April 2022.

There were now two independent descriptive metadata databases: one for Preservation and one for Access. The same packaging tools used to manage OAIS packages were used to download as well as update Preservation descriptive metadata records.

In the past, Preservation records needed to match what was needed by Access. This was no longer the case, allowing records to slowly migrate to use the same encoding standards used by Archivematica. Splitting these databases and the identifiers they use was a prerequisite for adopting Archivematica, with Preservation records now needing to be in Dublin Core with an eye towards migrating all custom Canadiana AIPs to Archivematica AIPs.

On the Access side, CAP and the metadatabus were enhanced to support some features of the IIIF data model. Relationships between documents (including whether a document would be displayed to patrons as a monograph, series, or issue of a series) would be encoded within databases using the IIIF data model.

This meant Access descriptive records could now all be in MARC, deprecating both IssueInfo and Dublin Core records. While working with Julienne Pascoe I became very excited by Linked Open Data (LOD), and was following the Bibliographic Framework Initiative (BIBFRAME) closely. While some of Canadiana/CRKN’s developers favored moving all Access records to a custom Dublin Core derived schema, I always favored the LOD aspects of BIBFRAME.

One of the many migration paths was to enhance records using MARC as an intermediary step. The Library of Congress itself set up a project to use FOLIO as part of their transition, with some CRKN staff also becoming interested in FOLIO.

Status of the move away from custom software and custom cataloging?

Moving away from custom software requires cataloging staff to move away from custom cataloging rules, and adopt the Metadata Application Profile (MAP) and data model used by relevant community software. CRKN staff would no longer be creating or imposing their own custom encoding, but working with larger communities and stakeholders.

Migration away from custom software involves transforming/refining all existing records (using automated processes) away from legacy custom MAPs/models.

- Adopting Archivematica involves dropping the CIHM and CAP encoding rules and adopting the Archivematica encoding rules and data model for Preservation.

- Adopting FOLIO (for records management and publication via OAI-PMH and likely later SPARQL for BIBFRAME) requires dropping the CIHM and CAP encoding rules and adopting the FOLIO encoding rules and data model. The data model is focused on concepts from MARC and BIBFRAME, so this involves migrating all Dublin Core and IssueInfo records to MARC (and encoding document relationships using the FOLIO data model, so series, issues and monographs are understood correctly). CAP’s data model is a small subset of the data model that FOLIO uses, so enhancement of relationship data becomes possible.

- Adopting Blacklight-marc requires either custom software that would have to be maintained indefinitely, or adopting MARC for all searchable records (easily sourced from FOLIO using OAI-PMH for indexing).

As of my last day in May 2023:

- The cataloguing team were still treating the CIHM encoding rules and data model (deprecated in 2012) as current.

- "Updates" to descriptive metadata records were being sourced from other data stores (some from spreadsheets, some from Inmagic DB/TextWorks databases using CIHM-era schemas, and only containing a subset of records) rather than from the Preservation or Access metadata databases.

- This meant any changes made directly to the Preservation or Access metadata databases were being overwritten.

- A very old problem: "Document A" is edited to become "Document B" which is then edited to become "Document C". Then someone comes along and edits "Document A" to create "Document D", meaning all the changes made for B and C are lost.
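This "lost update" pattern is usually caught by refusing a write that was based on a stale copy. The following is a minimal sketch of that general technique (revision checking), not a description of anything CRKN ran; the class and method names are hypothetical.

```python
class ConflictError(Exception):
    """Raised when a write is based on an out-of-date revision."""


class RevisionedStore:
    """Toy document store that rejects edits made from a stale copy."""

    def __init__(self):
        self._docs = {}  # doc_id -> (revision number, content)

    def read(self, doc_id):
        """Return (revision, content); revision 0 means 'does not exist yet'."""
        return self._docs.get(doc_id, (0, None))

    def write(self, doc_id, content, base_revision):
        """Store content only if the caller edited the current revision."""
        current_revision, _ = self.read(doc_id)
        if base_revision != current_revision:
            raise ConflictError(
                f"{doc_id}: edit based on revision {base_revision}, "
                f"but current revision is {current_revision}"
            )
        new_revision = current_revision + 1
        self._docs[doc_id] = (new_revision, content)
        return new_revision
```

With a check like this, the person editing the old "Document A" gets an error instead of silently discarding the B and C changes, and has to start again from the current record.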
